Meta’s Brain-to-Text AI Achieves 80% Accuracy, But Lab Constraints Remain Hurdle

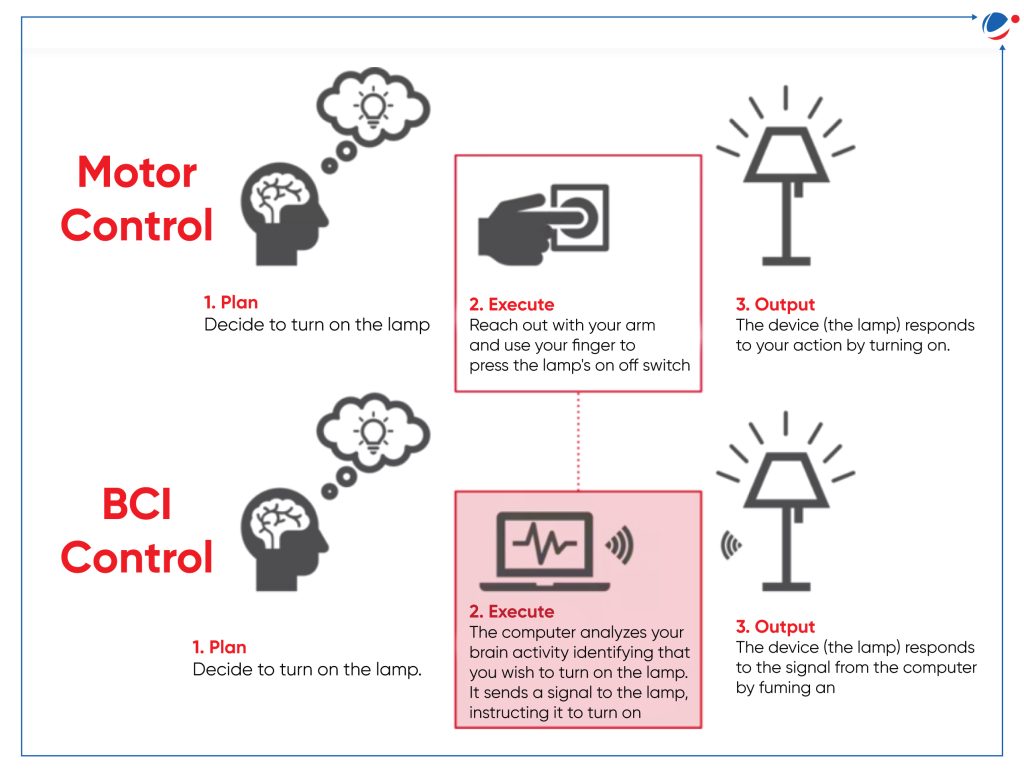

In a leap forward for neuroscience and artificial intelligence, Meta has unveiled a system capable of translating brain activity into typed text with up to 80% character-level accuracy, a feat once confined to science fiction. The technology, developed by researchers at Meta’s Fundamental AI Research (FAIR) lab, promises transformative applications for individuals with speech disabilities but remains tethered to laboratory conditions due to its reliance on bulky, million-dollar equipment. This breakthrough underscores both the rapid progress in brain-computer interfaces (BCIs) and the practical challenges of bringing thought-to-text systems into real-world use.

How Brain2Qwerty Works

The system, dubbed Brain2Qwerty, combines magnetoencephalography (MEG) scanners with a three-stage AI architecture to decode neural signals as users type. During trials at Spain’s Basque Center on Cognition, Brain and Language, 35 volunteers each spent about 20 hours in a shielded room, typing Spanish phrases like “el procesador ejecuta la instrucción” while MEG sensors captured magnetic fields generated by their brain activity. The AI then mapped these signals to keyboard inputs, achieving character error rates as low as 19% for top performers, which is the source of the headline figure of roughly 80% accuracy.
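To make the metric concrete, here is a minimal sketch of how a character error rate is commonly computed: the edit distance between the intended phrase and the decoded text, divided by the length of the intended phrase. This assumes the standard definition; the article does not describe the study's exact scoring procedure, and the decoded string below is a made-up example, not output from Brain2Qwerty.

```python
def levenshtein(ref: str, hyp: str) -> int:
    """Minimum number of single-character insertions, deletions,
    and substitutions needed to turn ref into hyp."""
    prev = list(range(len(hyp) + 1))  # distances from the empty prefix of ref
    for i, r in enumerate(ref, start=1):
        curr = [i]  # turning the first i chars of ref into "" takes i deletions
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(
                prev[j] + 1,         # delete r
                curr[j - 1] + 1,     # insert h
                prev[j - 1] + cost,  # substitute r with h, or keep a match
            ))
        prev = curr
    return prev[-1]


def character_error_rate(ref: str, hyp: str) -> float:
    """Edit distance normalized by the reference length."""
    if not ref:
        raise ValueError("reference must be non-empty")
    return levenshtein(ref, hyp) / len(ref)


# Phrase quoted in the article, paired with a hypothetical decoder output.
reference = "el procesador ejecuta la instrucción"
decoded = "el procezador ejecuta la instrucion"  # illustrative errors only

cer = character_error_rate(reference, decoded)
print(f"CER = {cer:.3f}, character accuracy = {1 - cer:.3f}")
```

Under this definition, a 19% error rate means roughly one character in five must be inserted, deleted, or replaced to recover the intended sentence.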

Non-Invasive but Cumbersome

Unlike invasive BCIs such as Neuralink’s brain implants, Meta’s approach requires no surgery. However, it demands absolute stillness from users: even a slight head movement disrupts the scanner’s ability to detect faint neural signals, which are roughly a billion times weaker than Earth’s magnetic field. Jean-Rémi King, head of Meta’s Brain & AI research team, emphasized that the project remains purely experimental. “Our effort is not at all towards products,” he stated. “I don’t think there is a path for products because it’s too difficult.”

Potential for Communication Aid

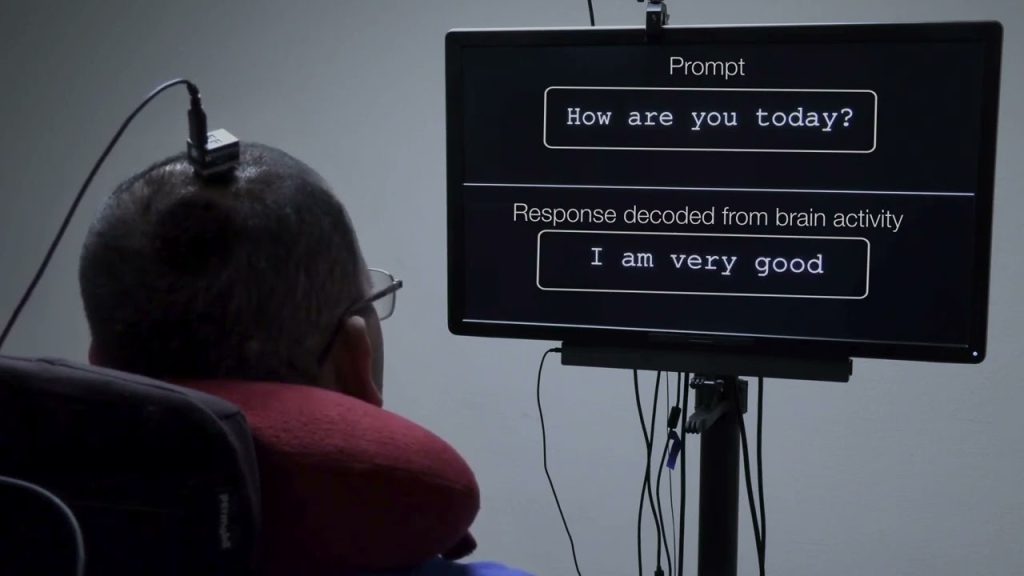

The technology’s potential to aid communication is undeniable. Imagine a patient with advanced ALS, unable to speak or move, mentally composing messages that appear on a screen. Current solutions, like EEG-based systems, often require laborious letter-by-letter selection and achieve far lower accuracy. Meta’s model reconstructs full sentences by analyzing the hierarchy of brain activity that precedes each keystroke: the brain starts with the abstract meaning of a sentence, breaks it down into words and syllables, and finally issues the motor commands that move the fingers.

Challenges in Accessibility

Despite its promise, the system faces steep barriers. The $2 million MEG scanner weighs half a ton and requires a magnetically shielded room, limiting accessibility to well-funded research institutions. By contrast, Neuralink’s implantable electrodes, though riskier, offer portability and higher signal precision. Experts like Sumner Norman of Forest Neurotech acknowledge Meta’s achievement in “high-quality data collection” but stress that non-invasive BCIs remain years away from clinical use.

Insights into Brain Language Processing

Meta’s research also sheds light on how the brain processes language. The team discovered a “dynamic neural code” that chains abstract thoughts into coherent sentences—a finding that could refine AI language models. For instance, chatbots might someday mimic human-like reasoning by adopting similar hierarchical structures. Meanwhile, the project aligns with Meta’s broader goal of understanding intelligence itself. “Language has become a foundation of AI,” King noted. “The computational principles behind it are key to building systems that learn like humans.”

Limitations and Future Prospects

Critics highlight limitations: the study involved only healthy participants typing memorized phrases, not spontaneous communication. Whether the system adapts to individuals with brain injuries, or to languages beyond Spanish, remains untested. Moreover, even the best-case character error rate of roughly 20% means about one character in five is wrong, which could garble messages in critical scenarios. Still, researchers celebrate the progress. “This isn’t just about typing,” said one neuroscientist. “It’s a window into how thoughts become actions.”

Building on Decades of Research

Meta’s work builds on decades of BCI research, including a 2017 Facebook project that aimed for a consumer brain-reading headband but folded due to technical hurdles. Today’s focus on foundational science marks a strategic shift. Instead of chasing wearables, Meta seeks insights to shape next-gen AI—even as competitors like OpenAI and Neuralink push toward commercialization.

A Glimpse of the Future

The road ahead is fraught with challenges. Miniaturizing MEG technology—akin to how room-sized computers evolved into smartphones—could take decades. Yet for millions living with paralysis or speech loss, even incremental progress sparks hope. As one advocate remarked, “Someday, this could give voices to those trapped in silence.” For now, Meta’s brain-to-text AI remains a laboratory marvel—a tantalizing glimpse of a future where minds and machines converse seamlessly.