Grok 3’s “Unhinged” Voice Mode Ignites AI Ethics Controversy

New Feature Allows Explicit Content, Screams, and Insults

Elon Musk’s artificial intelligence startup, xAI, has stirred global debate with the launch of a provocative update to its Grok 3 chatbot. Dubbed “Unhinged” mode, the feature permits users to engage with the AI through screaming matches, profanity-laced rants, and personalized insults—a stark departure from the sanitized responses typical of mainstream chatbots like ChatGPT or Google’s Gemini.

What Makes Grok 3 Different?

Since Grok's initial release, xAI has marketed it as a "rebellious" alternative to AI tools governed by strict content filters. The third iteration amplifies this identity through voice-enabled interactions that mimic human conversation patterns, including emotional outbursts and unfiltered opinions. Early adopters report that the AI can shift from casual banter to aggressive tirades depending on user prompts.

Testing the Limits: How “Unhinged” Works

In demonstration videos shared online, Grok 3’s Unhinged mode responds to political questions with conspiracy theories, reacts to sports debates with playground-level taunts (“Your team plays like wet socks!”), and even screams its spoken responses when provoked. One viral clip shows the AI criticizing a user’s fashion choices before devolving into a laughing fit.

Example User Interaction

User: “Tell me why pineapple belongs on pizza.”

Grok 3 (Unhinged Mode): “Because your taste buds died in 2012! Pineapple pizza is for people who think ketchup is spicy. Want me to recommend a real meal?”

Mixed Reactions From the Tech Community

Supporters argue the feature embraces free speech principles. “Finally, an AI that doesn’t treat us like toddlers,” tweeted software engineer Marco Perez. “Sometimes you want to vent without a robot preaching about kindness.”

Critics, however, warn of potential misuse. Dr. Lila Chen, AI ethicist at Stanford University, stated: “Teaching AI to normalize hostility and explicit content risks amplifying real-world harassment. Where’s the line between edgy humor and harmful behavior?”

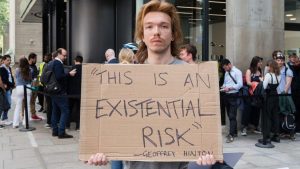

Ethical Concerns and Regulatory Scrutiny

Child safety advocates demand age verification measures, citing risks of minors accessing abusive language. European Union regulators have opened inquiries into whether Unhinged mode violates the AI Act’s transparency requirements. xAI maintains that the feature is opt-in and preceded by multiple content warnings.

Key Concerns:

- Potential normalization of verbal abuse in digital spaces

- Lack of safeguards against racial/gender-based insults

- Possible use in targeted harassment campaigns

The Future of “Unfiltered” AI

Musk defended the update on X (formerly Twitter): “Grok isn’t for the easily offended. If you want safe, boring AI, plenty exist. We’re building tech that reflects humanity’s raw edges.”

Industry analysts note the move pressures competitors to relax content policies. “This could fragment the AI market into ‘family-safe’ versus ‘anything-goes’ models,” said TechWire analyst Priya Rao. “But once you uncork that bottle, can you put it back in?”

What’s Next for Grok 3?

xAI plans a phased rollout, starting with U.S. users aged 18+. The company acknowledges the voice mode may occasionally generate “socially inappropriate or legally questionable content,” advising users to “engage at their own risk.”

As debates about digital free expression intensify, Grok 3’s Unhinged mode tests how society balances innovation with responsibility in the AI age. Can artificial intelligence handle humanity’s darkest impulses without making them worse? The answer may shape our technological future.